Content scraping (aka web scraping, web harvesting, web data extraction, and so forth) is the process of copying data from a website. Content scrapers are the people or software that copy the data. Web scraping itself isn’t a bad thing. In fact, all web browsers are essentially content scrapers. There are many legitimate purposes for content scrapers, such as web indexing for search engines.

The real concern is whether the content scrapers on your site are harmful or not. Competitors may want to steal your content and publish it as their own. If you can distinguish between legitimate users and the bad guys, you have a much better chance of protecting yourself. This article explains the basics of web scraping, along with 7 ways you can protect your WordPress site.

Types of Content Scrapers

There are many different ways content scrapers go about downloading data. It helps to know the various methods and what technology they use. Methods range from low tech (a person manually copying and pasting) to sophisticated bots (automated software capable of simulating human activity within a web browser). Here’s a summary of what you may be up against:

- Spiders: Web crawling is a large part of how content scrapers work. A spider like Googlebot starts by crawling a single webpage, then follows links from page to page, downloading each one.

- Shell Scripts: You can use the Linux shell to create content scrapers with scripts that call tools like GNU Wget to download content.

- HTML Scrapers: These are similar to shell scripts. This type of scraper is very common. It works by obtaining the HTML structure of a website to find data.

- Screenscrapers: A screen scraper is any program that captures data from a website by replicating the behavior of a human user who is browsing the internet.

- Human Copy: This is where a person manually copies content from your website. If you’ve ever published online, you may have noticed that plagiarism is rampant. After the initial flattery goes away, the reality that someone is profiting off your work sets in.

There are many ways to do the same thing. The categories of content scrapers listed above are by no means exhaustive. Additionally, there is a lot of overlap between categories.
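To make the spider idea concrete, here is a minimal sketch, in Python with only the standard library, of the step a crawler repeats on every page: pull out the links so they can be queued for the next download. The page URL and markup are made-up examples; a real spider would also fetch each page, remember which URLs it has visited, and (if it is well behaved) honor robots.txt.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collects the href of every <a> tag, resolved against the page URL."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    # Turn relative links like "/about" into absolute URLs.
                    self.links.append(urljoin(self.base_url, value))


def extract_links(base_url, html):
    """Return the absolute URLs of all links found in one HTML page."""
    parser = LinkCollector(base_url)
    parser.feed(html)
    return parser.links


page = '<p><a href="/about">About</a> <a href="https://example.org/">Out</a></p>'
print(extract_links("https://example.com/blog/", page))
```

A spider simply feeds each of those URLs back into the same process, which is why a single page can lead it across an entire site.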

Tools Used by Content Scrapers

Image by medejaja / shutterstock.com

There are a variety of content scrapers available, as well as a variety of tools to help the web scraping process. Some expert organizations also offer data extraction as a service. There is no shortage of tools content scrapers can use to get data. These tools are used by hobbyists and professionals for a range of different purposes. Many of them are free libraries, such as Beautiful Soup, a Python package for parsing HTML and XML documents. Below are a few tools commonly used by content scrapers.

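- cURL: A command-line tool for transferring data over HTTP and other protocols. It is built on libcurl, a C library for making HTTP requests that PHP and many other languages also use.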

- HTTrack: A free and open source web crawler that downloads websites for offline browsing.

- GNU Wget: A tool for downloading content from servers via FTP, HTTPS, and HTTP. Get it free from the GNU website.

- Kantu: Free visual web automation software that automates tasks usually handled by humans such as filling out forms.

7 Ways to Protect Your WordPress Site from Content Scrapers

Image by 0beron / shutterstock.com

The administrator of a website can use various measures to stop or slow a bot. Websites thwart content scrapers in several ways, such as detecting bots and preventing them from viewing their pages. Below are 7 methods to protect your site from content scrapers.

1. Rate Limiting and Blocking

You can fight off a large portion of bots by detecting the problem first. It’s typical for an automated bot to spam your server with an unusually high number of requests. Rate limiting, as its name would suggest, limits the server requests coming in from an individual client by setting a rule.
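The rule behind rate limiting can be sketched in a few lines. This is a minimal sliding-window limiter in Python, assuming a hypothetical limit of 60 requests per IP address per minute; on a real WordPress site you would normally get this from a security plugin or your web server rather than write it yourself. The `clock` parameter is only there so the logic can be tested without waiting.

```python
import time
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most max_requests per client IP within window_seconds."""

    def __init__(self, max_requests=60, window_seconds=60.0, clock=time.monotonic):
        self.max_requests = max_requests  # assumed limit; tune for your traffic
        self.window = window_seconds
        self.clock = clock
        self.history = defaultdict(deque)  # ip -> timestamps of recent allowed requests

    def allow(self, ip):
        """Return True if this request is within the limit, False otherwise."""
        now = self.clock()
        recent = self.history[ip]
        # Drop timestamps that have slid out of the window.
        while recent and now - recent[0] >= self.window:
            recent.popleft()
        if len(recent) >= self.max_requests:
            return False  # over the limit: reject, e.g. with HTTP 429
        recent.append(now)
        return True


limiter = SlidingWindowLimiter(max_requests=60, window_seconds=60)
print(limiter.allow("203.0.113.7"))
```

A sliding window avoids the burst that a fixed "per calendar minute" counter allows at the boundary between two minutes.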

You can do things like measure the milliseconds between requests. If a link was requested too quickly after the initial page load for a human to have clicked it, you are most likely dealing with a bot. You can then block that IP address. You can also block IP addresses based on other criteria, including their country of origin.
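Here is a minimal Python sketch of that timing check. The half-second threshold and the three-strike rule are assumptions for illustration: requiring several too-fast requests before blocking avoids punishing a real person for one accidental double click. In practice you would also want to exempt verified search engine crawlers so you don't block the indexers you rely on.

```python
import time


class TooFastDetector:
    """Blocks IPs whose requests repeatedly arrive faster than a human could click."""

    def __init__(self, min_interval=0.5, strikes_to_block=3, clock=time.monotonic):
        self.min_interval = min_interval          # seconds; an assumed threshold
        self.strikes_to_block = strikes_to_block  # too-fast requests tolerated before blocking
        self.clock = clock
        self.last_seen = {}  # ip -> time of the previous request
        self.strikes = {}    # ip -> count of too-fast requests
        self.blocked = set()

    def check(self, ip):
        """Return True if the request may proceed, False if the IP is (now) blocked."""
        if ip in self.blocked:
            return False
        now = self.clock()
        previous = self.last_seen.get(ip)
        self.last_seen[ip] = now
        if previous is not None and now - previous < self.min_interval:
            self.strikes[ip] = self.strikes.get(ip, 0) + 1
            if self.strikes[ip] >= self.strikes_to_block:
                self.blocked.add(ip)
                return False
        return True


detector = TooFastDetector()
print(detector.check("198.51.100.23"))
```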

2. Registration and Login

Requiring registration and login is a popular way to keep content safe from prying eyes. It can slow down bots that aren’t able to get past a sign-up or login form. Simply require registration and login for content you want to reserve for your members. The basics of login security apply here. Keep in mind that pages that require registration and login will not be indexed by search engines.
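The gating logic itself is simple. The Python sketch below uses hypothetical `Post` and `User` types and a made-up `render_post` function; in WordPress the same decision is usually made by a membership plugin or a theme check such as `is_user_logged_in()`. Showing anonymous visitors (and search engines) a short excerpt instead of nothing is a design choice assumed here, not a requirement.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Post:  # hypothetical post type for illustration
    title: str
    body: str


@dataclass
class User:  # hypothetical logged-in user; None means anonymous
    name: str


def render_post(post: Post, user: Optional[User], excerpt_words: int = 30) -> str:
    """Full post for logged-in users; an excerpt plus a login prompt for everyone else."""
    if user is not None:
        return f"{post.title}\n\n{post.body}"
    words = post.body.split()
    excerpt = " ".join(words[:excerpt_words])
    if len(words) > excerpt_words:
        excerpt += " ..."
    return f"{post.title}\n\n{excerpt}\n\n[Log in or register to read the rest.]"


print(render_post(Post("Members only", "The full article text goes here."), None))
```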

3. Honeypots and Fake Data

In computer science, honeypots are virtual sting operations. You catch would-be attackers by setting a trap, the honeypot, that detects traffic from content scrapers. There are countless ways to do this.

For example, you can add an invisible link to your webpage. Then set up a rule that blocks the IP address of any client that follows the link. More sophisticated honeypots can be tough to set up and maintain. The good news is that there are lots of open source honeypot projects out there. Check out this large list of awesome honeypots on GitHub.
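The invisible-link trap can be sketched like this in Python. The trap path is a hypothetical name you would choose yourself. Listing it under `Disallow` in robots.txt matters: well-behaved crawlers such as Googlebot obey robots.txt, so only bots that ignore it (or people poking at your source) ever request the trap, which keeps search engines out of your block list.

```python
HONEYPOT_PATH = "/do-not-follow-7f3a/"  # hypothetical trap URL; pick your own

# Hidden from human visitors, but present in the HTML that scrapers parse.
HONEYPOT_LINK = (
    f'<a href="{HONEYPOT_PATH}" rel="nofollow" style="display:none" '
    'tabindex="-1" aria-hidden="true">.</a>'
)

# Tell well-behaved crawlers to stay away from the trap.
ROBOTS_TXT = f"User-agent: *\nDisallow: {HONEYPOT_PATH}\n"

blocked_ips = set()


def handle_request(ip, path):
    """Return an HTTP status code; requesting the trap blocks the IP from then on."""
    if ip in blocked_ips:
        return 403
    if path.startswith(HONEYPOT_PATH):
        blocked_ips.add(ip)
        return 403
    return 200


print(handle_request("192.0.2.10", "/blog/"))
```

Keep in mind that IP addresses can be shared (offices, mobile carriers), so you may prefer temporary blocks over permanent ones.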

4. Use a CAPTCHA

Captcha stands for Completely Automated Public Turing test to tell Computers and Humans Apart. Captchas can be annoying, but they are also useful. You can use one to protect areas you suspect a bot may be interested in, such as the submit button on your contact form. There are many good Captcha plugins available for WordPress, including Jetpack’s Captcha module. We also have an informative post, The Benefits of Using CAPTCHA in WordPress, that you should check out.
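To show the idea behind the simplest captchas, here is a Python sketch of a math question whose answer is signed with HMAC and sent in a hidden form field, so the server doesn't need to store anything. This is a toy under stated assumptions: the secret key handling is simplified, a token can be reused until it expires, and simple arithmetic only stops the most basic bots. A maintained plugin or service is the better choice on a live site.

```python
import hashlib
import hmac
import secrets
import time

SECRET_KEY = secrets.token_bytes(32)  # assumption: a real site loads a fixed secret from config


def _sign(answer, issued_at):
    msg = f"{answer}:{issued_at}".encode()
    return hmac.new(SECRET_KEY, msg, hashlib.sha256).hexdigest()


def new_challenge():
    """Return (question, token). The token goes in a hidden form field."""
    a, b = secrets.randbelow(10) + 1, secrets.randbelow(10) + 1
    issued_at = int(time.time())
    token = f"{issued_at}:{_sign(a + b, issued_at)}"
    return f"What is {a} + {b}?", token


def verify(token, submitted, max_age=600):
    """Check the visitor's answer against the signed token."""
    try:
        issued_at_str, signature = token.split(":", 1)
        issued_at = int(issued_at_str)
        answer = int(submitted.strip())
    except ValueError:
        return False  # malformed token or non-numeric answer
    if time.time() - issued_at > max_age:
        return False  # challenge expired
    return hmac.compare_digest(signature, _sign(answer, issued_at))


question, token = new_challenge()
print(question)
```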

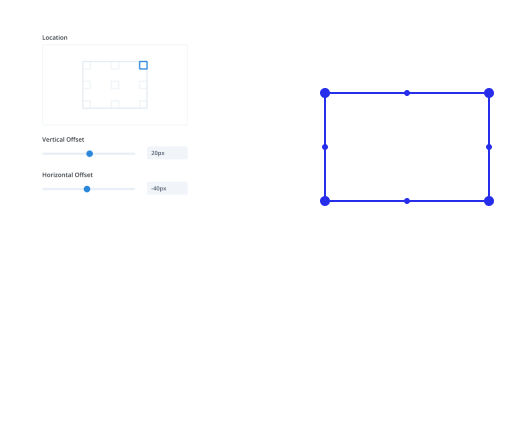

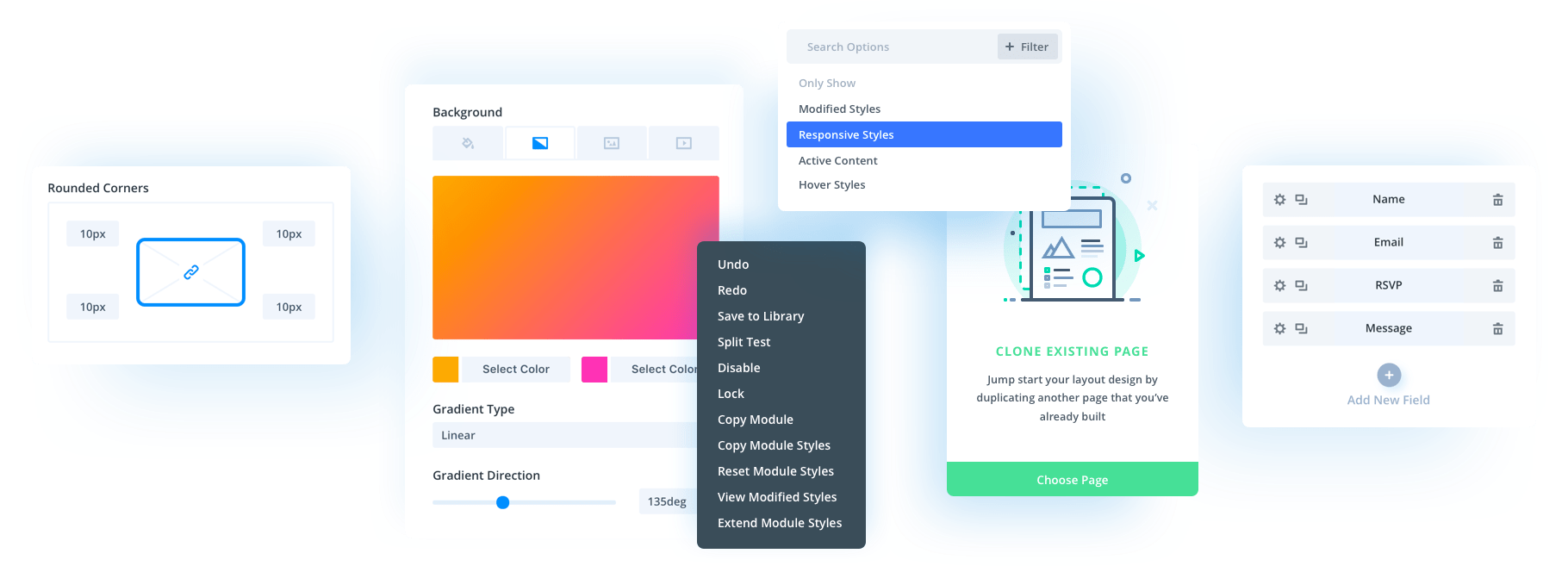

5. Frequently Change the HTML

Changing your markup frequently can mess with content scrapers that rely on predictable HTML to identify parts of your website. You can throw a wrench into this process by adding unexpected elements. Facebook used to do this by generating random element IDs, and you can too. This can frustrate content scrapers until they break. Keep in mind that this method can cause problems with things like updates and caching.
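As a rough illustration, the Python sketch below renames chosen CSS classes to random names, consistently in both the HTML and the stylesheet, so a scraper that targets something like `.post-title` stops matching. The function name and the regex-based approach are assumptions for a simple demo; a real build step would use proper HTML and CSS parsers, and the new HTML and CSS must always be deployed (and cached) together, which is exactly the update and caching headache mentioned above.

```python
import re
import secrets


def randomize_classes(html, css, class_names):
    """Rename the given CSS classes to random names consistently in HTML and CSS."""
    mapping = {name: f"c{secrets.token_hex(4)}" for name in class_names}

    def swap_html(match):
        classes = match.group(2).split()
        renamed = " ".join(mapping.get(c, c) for c in classes)
        return f'{match.group(1)}"{renamed}"'

    # Rewrite class="..." attributes (double-quoted only, for simplicity).
    new_html = re.sub(r'(class=)"([^"]*)"', swap_html, html)

    new_css = css
    for old, new in mapping.items():
        # Replace whole class selectors only: ".post-title" but not ".post-title-large".
        new_css = re.sub(rf"\.{re.escape(old)}(?![\w-])", f".{new}", new_css)
    return new_html, new_css, mapping


html_in = '<h1 class="post-title big">Hi</h1>'
css_in = ".post-title { color: red } .post-title-large { color: blue }"
print(randomize_classes(html_in, css_in, ["post-title"]))
```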

6. Obfuscation

You can obscure your data to make it less accessible by modifying your site’s files. I have come across a handful of websites that serve text as an image, which makes it much harder for people, and for bots without text recognition, to copy and paste your text. You can also use CSS sprites to hide the names of images.
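One very simple form of obfuscation, swapped in here because it needs no images, is encoding text (an email address is a common target) as HTML character references. Browsers display it normally, but a naive scraper that searches the raw HTML for readable text sees only numbers. Any scraper that uses a real HTML parser decodes it instantly, so treat this Python sketch as a speed bump, not protection.

```python
import html


def to_entities(text):
    """Encode every character as a decimal HTML character reference."""
    return "".join(f"&#{ord(ch)};" for ch in text)


encoded = to_entities("hi@example.com")
print(encoded)                 # what appears in the page source
print(html.unescape(encoded))  # what a browser (or a parser) turns it back into
```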

7. Don’t Post It!

When it comes to truly sensitive information, the offline world is your best bet. If you have information you absolutely need to keep private, don’t put it on the internet. Not putting the information online is truly the only way to keep your content safe. While the methods we mentioned here are all effective ways to deter content scrapers, there are no guarantees. These methods make scraping more difficult, but not impossible.

Wrapping Up

Some security measures affect user experience. Keep in mind that you may have to make a compromise between safety and accessibility. It’s best to go after the low-hanging fruit first. In many cases, you can find a plugin to help. Security plugins like Wordfence and Sucuri can automate rate limiting and blocking, among other things. The most effective methods I have come across involve:

- Using honeypots

- Obfuscating the code

- Rate limiting and other forms of detection

There are no bulletproof solutions to protect your site from content scrapers. More sophisticated content scrapers evolved in response to savvy webmasters. It’s a back-and-forth battle that has been going on since the early 1990s. Scrapers can fake nearly every aspect of a human user, which can make it difficult to figure out who the bad guys are. While this is daunting, most of the content scrapers you will deal with will be basic enough to stop easily.

Do you have any experience with malicious content scrapers? What did you do to stop them? Feel free to share in the comments section below.

Article thumbnail image by Lucky clover / shutterstock.com

I have had problems with scrapers in the past and I realized it was a battle that I didn’t have the time or energy to fight.

1. Many scrapers use your information and posts in hidden fields on their website. Some even get sneaky and use the old trick of showing one version of the page to the search engines and another to humans (with your data missing, of course).

2. Others will change a few words here and there, but it is obvious that the article originated on your site.

I have never used the DMCA method, but I have written to webmasters asking them to stop. Sometimes it worked, sometimes not.

My opinion, though, is that once you have posted an article, it is gone. And whether Google can see (or cares) who originated the article is something I have never figured out. Imagine that I post an article, but the scraper’s site gets indexed more often and faster. His copy of my article could potentially get indexed before mine.

One “mentor” told me: “Stop worrying about all the problems you can’t fix. Build your site, post good material, build a following, and move on. That is all you can hope for.”

I’ve tried to abide by that, but I’m guilty of putting some of my posts into Copyscape just to see if they appear on any other site. 🙂

As to images, I know my images will be scraped and re-used. I don’t even consider that anymore because it is a given.

Thanks for the article and the ideas.

This is interesting, but if you block the scrapers, you might block the indexers like Google. I don’t think there is a good solution to this.

I too have experienced post theft several times. Someone copied my content and posted it as their own. But I ignored it, since no valuable sites or writers would resort to such malpractice. Moreover, Google can easily identify such stolen articles, can’t it?

Interesting points you shared. Thank you very much.

I’m bookmarking this page Brenda for more ideas to use in my fight against scrapers and other online gremlins. 🙂

I had this problem about 2 years ago, with content managers for coaches copying my blog posts word for word and even using my images on their websites after removing my logo. I guess they thought a coach sitting in India wouldn’t notice her work on a website in the UK or USA!

I also found my images being scraped and made available for free download. One particular person ended up changing his host and domain registrar 3 times until he disappeared into Panama and the clouds in a matter of 3 days!

Anyway, there’s nothing like a good DMCA notice and contacting the website host and domain registrar to rap some knuckles and get things removed.

It’s a tedious process, so the next best thing is to use plugins that prevent copying, prevent hotlinking via cPanel, use Wordfence for blocking bots, and check Google and other free online tools for any copying of your content.

There is the plugin BLACKHOLE. It really protects.

Very nice article

How timely!

I wasn’t exactly “flattered” when my home page copy was recently plagiarized, word for word, via “Human Copy”. Unfortunately, there doesn’t seem to be much to do to protect against this particular method of “scraping”.

I did call the person out on it, and he made a few adjustments to his page copy, but decided to keep some of my best material, despite it having nothing to do with the rest of his content.

It sounds petty, but I wonder if these “human” scrapers even appreciate the challenge, the hard work, that writing can be.

That’s a copyright infringement and if you so desire, you could ask the host to take his site down.

Kathy, you have pointed it out to him. If he still refuses to remove all your copyrighted material, then it’s time to complain to his hosting provider and get it removed. If that fails, or you want a quicker route, then a DMCA takedown is the answer.

http://www.dmca.com/Takedowns.aspx?ad=xtkdnwrd&gclid=CN-ryuqeytMCFQ2eGwodB_sGQw

Is there any plugin that hides which software a website runs on? For example, if I want to hide from my competitors that I use the WordPress platform and the Divi theme, is there any tool that allows me to do that?

Until now I have hidden the theme name by renaming its folder and changing other parameters, but with FileZilla I can still see the CSS classes and, through the theme, which platform and theme are installed on my websites.

Thank you

Thanks for the article. You could add a “share on social media to unlock the content” feature. That way, the original post will be ranked and reach more users.

The Divi theme login page should also offer a “Register with Social Media” option during registration.

Wow, that’s a great idea! How can a newbie like me achieve this result?